Have you ever experienced one of those terrifying moments when you have no Wi-Fi? If so, you have realized just how much of what you do on your computer relies on the internet. Out of sheer habit, you find yourself trying to check your email, look at your friends' Instagram photos, or read their tweets.

Since so much computer work involves the web, it would be very convenient if your programs could get online as well. That is what web scraping is: using a program to download and process content from the web. For instance, Google runs a variety of scraping programs to index web pages for its search engine.

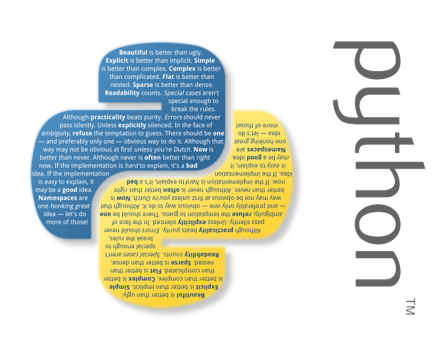

There are many ways to scrape data from the internet, and many of them rely on programming languages such as Python and R. With Python, for instance, you can use modules such as Requests, Beautiful Soup, webbrowser, and Selenium.

The Requests module lets you download files from the web easily, without having to worry about complicated issues such as network errors, connection problems, and data compression. It does not come with Python, so you have to install it first.

The module was written because Python's `urllib2` module is complicated to use. Installing it is easy: run `pip install requests` from the command line. Then check that the module installed correctly by entering `import requests` at the interactive shell (`>>> import requests`). If no error messages appear, the install was successful.

To download a page, call the `requests.get()` function. It takes a URL string to download and returns a `Response` object, which contains the response the web server returned for your request. If the request succeeds, the downloaded web page is stored as a string in the response object's `text` attribute.
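The call described above can be sketched as follows. The `download_page` helper name and the 10-second `timeout` are our own illustrative choices, not part of Requests itself; `requests.get()` and the `text` attribute are the real API.

```python
import requests


def download_page(url):
    """Download the page at `url` and return its body as a string."""
    # requests.get() takes the URL as a string and returns a Response object
    res = requests.get(url, timeout=10)
    # On success, the downloaded page is stored as a str in res.text
    return res.text
```

For example, `page = download_page("https://example.com/")` (a placeholder URL) followed by `print(page[:250])` shows the first 250 characters of the page's HTML.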

The response object has a `status_code` attribute that you can check to find out whether the download succeeded; a value equal to `requests.codes.ok` (200) means it did. Alternatively, you can call the `raise_for_status()` method on the response object. It raises an exception if there was an error downloading the file and does nothing if the download succeeded, which makes it a simple way to ensure that a program halts on a bad download.
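Here is a minimal sketch combining both checks. The `get_or_stop` name and the printed message are hypothetical; `status_code`, `requests.codes.ok`, and `raise_for_status()` are the real Requests API.

```python
import requests


def get_or_stop(url):
    """Download `url`, raising an exception if the download failed."""
    res = requests.get(url, timeout=10)
    # status_code is the numeric HTTP status; requests.codes.ok == 200
    if res.status_code != requests.codes.ok:
        print("Download failed with status", res.status_code)
    # raise_for_status() does nothing for a successful response and raises
    # requests.exceptions.HTTPError for 4xx and 5xx responses
    res.raise_for_status()
    return res
```

If you would rather handle a failed download than let the program stop, wrap the call in a `try` block and catch `requests.exceptions.HTTPError`.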

From here, you can save the downloaded web page to your hard drive with the standard `open()` function and the file object's `write()` method. However, you must open the file in write-binary mode by passing `'wb'` as the second argument to `open()`. Writing binary data instead of text data preserves the page's Unicode encoding.
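As a simple sketch of saving in write-binary mode, the whole response can be written at once from the response's `content` attribute, which holds the body as bytes. The `save_page` helper is hypothetical, and the `with` statement closes the file automatically when the block ends.

```python
import requests


def save_page(url, path):
    """Download `url` and write its raw bytes to the file at `path`."""
    res = requests.get(url, timeout=10)
    res.raise_for_status()
    # 'wb' opens the file in write-binary mode; res.content holds the raw
    # bytes exactly as the server sent them, so the encoding is untouched
    with open(path, "wb") as f:
        f.write(res.content)
```

This keeps the entire page in memory before writing it, which is fine for ordinary web pages; for large files, the chunked loop below is the better fit.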

To write the data to the file, use a `for` loop over the response object's `iter_content()` method. On each iteration through the loop, this method returns a chunk of the content as bytes; you specify how many bytes each chunk should contain by passing a number to `iter_content()`. When you have finished writing, call `close()` to close the file, and the download is complete.
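The loop described above can be sketched as follows. The `download_to_file` name and the 100,000-byte default chunk size are arbitrary illustrative choices. Passing `stream=True` to `requests.get()` is optional but lets Requests fetch the body lazily as `iter_content()` asks for it; here the `with` statement calls the file's `close()` for you.

```python
import requests


def download_to_file(url, path, chunk_size=100_000):
    """Stream `url` into the file at `path`; return the number of bytes written."""
    # stream=True makes requests fetch the body lazily, as iter_content() asks for it
    with requests.get(url, stream=True, timeout=10) as res:
        res.raise_for_status()
        written = 0
        # 'wb' = write-binary mode, so the bytes are saved unchanged
        with open(path, "wb") as out:
            # Each pass through the loop yields the next chunk as a bytes object
            for chunk in res.iter_content(chunk_size):
                written += out.write(chunk)
        # Leaving the inner with-block has already called out.close()
    return written
```

For example, `download_to_file("https://example.com/", "example.html")` (placeholder URL and filename) saves the page and returns its size in bytes.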
